NodeJS for Data-Intensive Applications: Use Cases and Best Practices

Are you struggling to ensure optimal performance and scalability for your data-intensive Node.js applications in the face of ever-growing data volumes and complex processing demands?

Worry not, as we have the solution for you. By adopting effective monitoring and performance optimization practices, you can overcome these challenges and deliver high-performing, scalable, and reliable applications.

In this article, we will look at where Node.js fits in data-intensive applications and at the practices that keep such applications fast, scalable, and reliable. We will address the common issues faced by developers, such as sluggish response times, inefficient resource utilization, and difficulties in managing large datasets. By following a set of proven best practices for asynchronous programming, caching, error handling and logging, streams, security, and monitoring, you can gain valuable insights into your application’s performance, identify and resolve bottlenecks, and ensure efficient resource utilization.

Join us on this journey as we explore the key use cases and best practices. By implementing these practices, you can unlock the true potential of your data-intensive Node.js applications, deliver exceptional user experiences, and stay ahead in today’s data-driven world.

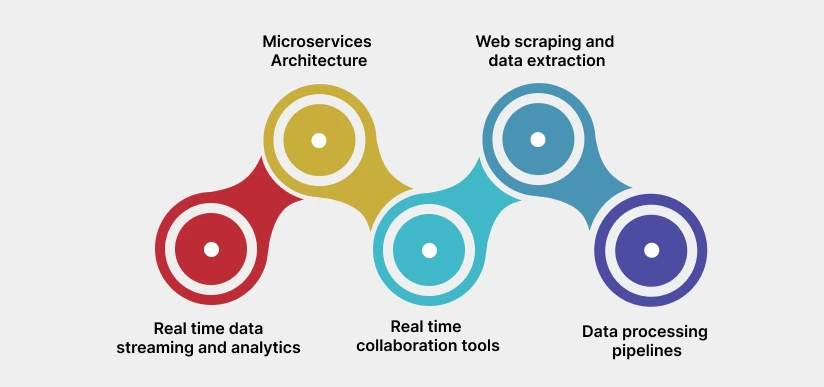

NodeJS for Data-Intensive Applications: Use Cases

Real-time Data Streaming and Analytics:

Node.js is well-suited for real-time data streaming and analytics applications, where data needs to be processed and analyzed as it arrives. With its event-driven architecture and non-blocking I/O operations, Node.js can efficiently handle multiple concurrent connections and process incoming data in real time. Whether it’s processing real-time financial data, monitoring IoT devices, or building a real-time analytics dashboard, Node.js can provide low-latency and scalable solutions.
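To make the event-driven model concrete, here is a minimal sketch that keeps a per-device rolling average up to date as each reading arrives, using Node’s built-in EventEmitter. The feed emitter, the "reading" event name, and trackRollingAverage are hypothetical; in a real system the events would come from a socket, an MQTT client, or a message-queue consumer.

```javascript
const { EventEmitter } = require('node:events');

// Hypothetical feed: real events would come from a socket or queue consumer.
const feed = new EventEmitter();

// Maintain a rolling average over the last `windowSize` readings per device,
// updated incrementally as each event arrives instead of in batches.
function trackRollingAverage(emitter, windowSize) {
  const windows = new Map();  // deviceId -> recent values
  const averages = new Map(); // deviceId -> latest rolling average

  emitter.on('reading', ({ deviceId, value }) => {
    const recent = windows.get(deviceId) ?? [];
    recent.push(value);
    if (recent.length > windowSize) recent.shift(); // drop the oldest value
    windows.set(deviceId, recent);

    const sum = recent.reduce((acc, v) => acc + v, 0);
    averages.set(deviceId, sum / recent.length);
  });

  return averages;
}

const averages = trackRollingAverage(feed, 3);
feed.emit('reading', { deviceId: 'sensor-1', value: 10 });
feed.emit('reading', { deviceId: 'sensor-1', value: 20 });
feed.emit('reading', { deviceId: 'sensor-1', value: 30 });
feed.emit('reading', { deviceId: 'sensor-1', value: 40 });
console.log(averages.get('sensor-1')); // 30 (average of 20, 30, 40)
```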

Microservices Architecture:

Data-intensive applications often adopt a microservices architecture to divide complex tasks into smaller, independent services. Node.js, with its lightweight and modular nature, is an excellent choice for building microservices. Each microservice can be built using Node.js, allowing for efficient data exchange between services using APIs or message queues. The non-blocking I/O operations of Node.js enable asynchronous communication between microservices, ensuring high performance and responsiveness.

Web Scraping and Data Extraction:

Many data-intensive applications rely on web scraping and data extraction to gather information from various sources. Node.js, coupled with libraries like Cheerio and Puppeteer, provides an excellent platform for building web scraping applications. The event-driven nature of Node.js allows for efficient handling of multiple requests, while Cheerio simplifies parsing and extracting data from static HTML documents. For dynamic websites that require JavaScript execution, Puppeteer, which controls a headless Chrome or Chromium browser, can render pages before their content is extracted.

Real-time Collaboration Tools:

Applications that require real-time collaboration, such as chat applications or collaborative document editing platforms, benefit from Node.js’s ability to handle concurrent connections and real-time updates. Node.js, along with frameworks like Socket.IO, enables bidirectional, event-driven communication between clients and servers. This makes it ideal for building interactive and real-time collaborative features, where data updates need to be reflected instantly to all connected users.

Data Processing Pipelines:

Node.js can be a valuable tool in building data processing pipelines, where large volumes of data need to be ingested, transformed, and stored. Paired with message brokers like Apache Kafka or RabbitMQ (through their Node.js client libraries), Node.js services can produce and consume messages reliably, ensuring dependable data flow between the different stages of the pipeline. Additionally, the asynchronous, non-blocking nature of Node.js lets a single process keep many I/O-bound operations in flight at once, enabling high throughput, while CPU-heavy stages can be scaled out with worker threads or additional processes.

Best Practices for Node.js in Data-Intensive Applications:

Asynchronous Programming:

Node.js is built on an asynchronous, non-blocking I/O model, which is a fundamental aspect of its design philosophy. Asynchronous programming allows applications to handle multiple tasks concurrently without waiting for each operation to complete before moving on to the next one. Instead of blocking the execution and wasting valuable processing time, Node.js can initiate an I/O operation and continue executing other tasks. Once the operation completes, its callback is invoked (or, with promise-based APIs, the promise settles), allowing the application to handle the result.

Best Practices for Asynchronous Programming in Node.js:

Use Callbacks or Promises:

- Utilize callbacks to handle asynchronous operations in Node.js. Callbacks are functions that are executed when an operation completes or an event occurs.

- Promises provide a more structured and readable way to handle asynchronous operations. Promises encapsulate the result of an asynchronous operation, allowing you to chain multiple operations together and handle success or failure cases.
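The sketch below consumes the same operation both ways. parseConfig is a hypothetical callback-style function that follows Node’s error-first convention, and util.promisify from the standard library wraps it so it can be used as a promise.

```javascript
const { promisify } = require('node:util');

// Hypothetical callback-style API using the error-first convention:
// the callback receives (err, result).
function parseConfig(text, callback) {
  setImmediate(() => {
    let result;
    try {
      result = JSON.parse(text);
    } catch (err) {
      return callback(err); // report failure through the first argument
    }
    callback(null, result); // success: err is null
  });
}

// Callback usage: always check the error argument first.
parseConfig('{"batchSize": 500}', (err, config) => {
  if (err) return console.error('Invalid config:', err.message);
  console.log('callback result:', config.batchSize);
});

// util.promisify wraps an error-first callback API in a Promise,
// so the same operation can be chained with .then()/.catch().
const parseConfigAsync = promisify(parseConfig);

parseConfigAsync('{"batchSize": 500}')
  .then((config) => config.batchSize * 2)
  .then((doubled) => console.log('promise result:', doubled))
  .catch((err) => console.error('Invalid config:', err.message));
```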

Avoid Blocking the Event Loop:

- Ensure that your code does not contain long-running or blocking operations that can hinder the event loop’s execution.

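- Offload CPU-intensive work, such as parsing, compressing, or transforming large payloads, to worker threads (the worker_threads module) or separate processes (child_process) to prevent blocking the event loop and maintain application responsiveness. Node.js already performs most I/O asynchronously, so I/O rarely needs offloading; the exception is synchronous APIs such as fs.readFileSync, which should be kept off hot paths.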
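A minimal sketch of offloading CPU-bound work with the built-in worker_threads module. The prime-counting function is only a stand-in for any expensive computation, countPrimesInWorker is a hypothetical helper, and the worker code is inlined with eval: true just to keep the example in one file; a real project would usually point Worker at a separate module.

```javascript
const { Worker } = require('node:worker_threads');

// Worker source, inlined with { eval: true } to keep this sketch self-contained.
const workerSource = `
  const { parentPort, workerData } = require('node:worker_threads');
  // Deliberately CPU-heavy: count primes below workerData.limit.
  function countPrimes(limit) {
    let count = 0;
    for (let n = 2; n < limit; n++) {
      let isPrime = true;
      for (let d = 2; d * d <= n; d++) {
        if (n % d === 0) { isPrime = false; break; }
      }
      if (isPrime) count++;
    }
    return count;
  }
  parentPort.postMessage(countPrimes(workerData.limit));
`;

// Runs the computation on another thread and resolves with its result,
// leaving the main event loop free to serve other requests meanwhile.
function countPrimesInWorker(limit) {
  return new Promise((resolve, reject) => {
    const worker = new Worker(workerSource, { eval: true, workerData: { limit } });
    worker.once('message', resolve);
    worker.once('error', reject);
    worker.once('exit', (code) => {
      if (code !== 0) reject(new Error(`Worker exited with code ${code}`));
    });
  });
}

countPrimesInWorker(100000).then((count) => console.log(`primes below 100000: ${count}`));
```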

Leverage Async/Await:

- Async/await, introduced in ES2017, is syntax built on top of promises that lets asynchronous code read like synchronous code.

- Use the async keyword to define a function as asynchronous and the await keyword to pause execution until an asynchronous operation completes.

- Async/await provides a more readable and structured way to write asynchronous code, avoiding callback nesting and enhancing code maintainability.
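As an illustration, the following sketch chains two dependent lookups with async/await. fetchUser and fetchOrders are hypothetical helpers whose timers stand in for database or API latency.

```javascript
const { setTimeout: delay } = require('node:timers/promises');

// Hypothetical data-access helpers; the delays simulate I/O latency.
async function fetchUser(id) {
  await delay(20);
  return { id, name: `user-${id}` };
}

async function fetchOrders(userId) {
  await delay(20);
  return [{ userId, total: 40 }, { userId, total: 60 }];
}

// Dependent steps read top to bottom, with no nested callbacks and no
// .then() chain. Each await yields to the event loop while waiting.
async function getUserSummary(id) {
  const user = await fetchUser(id);
  const orders = await fetchOrders(user.id);
  const spent = orders.reduce((sum, o) => sum + o.total, 0);
  return { name: user.name, orderCount: orders.length, spent };
}

getUserSummary(7).then((summary) => console.log(summary));
// { name: 'user-7', orderCount: 2, spent: 100 }
```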

Error Handling:

- Handle errors appropriately in asynchronous code to prevent unhandled exceptions and crashes.

- Use try-catch blocks to capture synchronous errors, and always check the first (error) argument of error-first callbacks.

- For promises or async/await, use try-catch blocks or .catch() method to handle exceptions and handle errors gracefully.
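The sketch below shows one pattern per style: an error-first callback, try/catch around await, and a trailing .catch() on a promise chain. readConfigOrDefault is a hypothetical helper that treats a missing file as recoverable and lets any other error (such as invalid JSON) propagate to the caller.

```javascript
const fs = require('node:fs');
const fsp = require('node:fs/promises');

// 1. Error-first callback: check `err` before touching the result.
fs.readFile('/path/that/does/not/exist', 'utf8', (err, data) => {
  if (err) {
    console.error('callback error:', err.code); // e.g. ENOENT
    return;
  }
  console.log(data.length);
});

// 2. async/await: wrap awaited calls in try/catch and decide how to recover.
async function readConfigOrDefault(path, fallback) {
  try {
    return JSON.parse(await fsp.readFile(path, 'utf8'));
  } catch (err) {
    if (err.code === 'ENOENT') return fallback; // recoverable: use defaults
    throw err; // anything else (e.g. invalid JSON) goes to the caller
  }
}

// 3. Promise chains: end every chain with .catch() so no rejection goes unhandled.
readConfigOrDefault('/path/that/does/not/exist', { batchSize: 100 })
  .then((config) => console.log('config:', config))
  .catch((err) => console.error('fatal config error:', err));
```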

Optimize I/O Operations:

- When performing I/O operations, such as reading from a file or making an API request, leverage the non-blocking nature of Node.js to initiate multiple operations concurrently.

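- Start independent operations together and wait for them with Promise.all() instead of awaiting each one in turn, so their I/O overlaps and throughput improves. Note that Promise.all() rejects as soon as any operation fails; use Promise.allSettled() when you need every outcome.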
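The difference is easy to see with a timing sketch. fetchMetric is a hypothetical stand-in for an API call that takes about 100 ms; measured times will vary slightly from run to run.

```javascript
const { setTimeout: delay } = require('node:timers/promises');

// Hypothetical I/O call: each "request" takes roughly 100 ms.
async function fetchMetric(name) {
  await delay(100);
  return { name, value: name.length };
}

async function main() {
  const names = ['cpu', 'memory', 'disk'];

  // Sequential: each await waits for the previous request (~300 ms total).
  let start = Date.now();
  for (const name of names) await fetchMetric(name);
  console.log(`sequential: ~${Date.now() - start} ms`);

  // Concurrent: start every request first, then wait for all (~100 ms total).
  start = Date.now();
  const results = await Promise.all(names.map(fetchMetric));
  console.log(`concurrent: ~${Date.now() - start} ms`, results);
  return results; // results keep the input order
}

main();
```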

Manage Control Flow:

- Properly manage the flow of asynchronous operations by utilizing control flow libraries or patterns like async.js or the async/await syntax.

- Control flow libraries provide utilities to handle complex control flows in asynchronous code, ensuring proper sequencing of tasks and handling of dependencies.
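Unbounded concurrency can overwhelm a database or a rate-limited API, so a common control-flow need is "run these tasks, but at most N at a time". async.js offers helpers for this, such as mapLimit; the sketch below is a hand-rolled, dependency-free version under the hypothetical name mapWithLimit, with processBatch standing in for real work.

```javascript
const { setTimeout: delay } = require('node:timers/promises');

// Run `worker` over `items` with at most `limit` tasks in flight (limit >= 1).
// Results are returned in input order.
async function mapWithLimit(items, limit, worker) {
  const results = new Array(items.length);
  let next = 0;

  // Each runner claims the next unprocessed index until none remain.
  // JavaScript is single-threaded, so `next++` cannot race between runners.
  async function runner() {
    while (next < items.length) {
      const index = next++;
      results[index] = await worker(items[index], index);
    }
  }

  const runners = Array.from({ length: Math.min(limit, items.length) }, runner);
  await Promise.all(runners);
  return results;
}

// Hypothetical task that tracks how many copies run at the same time.
let active = 0;
let peak = 0;
async function processBatch(batchId) {
  active++;
  peak = Math.max(peak, active);
  await delay(10); // stands in for a database write or API call
  active--;
  return `batch-${batchId}-done`;
}

mapWithLimit([1, 2, 3, 4, 5, 6], 2, processBatch).then((results) => {
  console.log(results, `peak concurrency: ${peak}`); // peak never exceeds 2
});
```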

Leverage Streams:

- Streams are a powerful feature in Node.js that allow for efficient processing of large amounts of data.

- Use streams for reading, transforming, or writing data in a chunked manner, reducing memory usage and enhancing performance.

- Streams are particularly useful when working with file I/O or network operations.

Caching and Memoization:

Caching and memoization are techniques used in data-intensive applications to improve performance by storing and reusing computed or frequently accessed data. These techniques help reduce the need for redundant computations and minimize response times, resulting in a more efficient application. Node.js applications can integrate a variety of caching mechanisms, from simple in-process caches to dedicated cache servers.

In data-intensive applications, certain operations or computations can be time-consuming and resource-intensive. Caching involves storing the results of these operations in memory or a dedicated cache system. Memoization, on the other hand, is a specific form of caching that stores the result of a function based on its input parameters. Both techniques aim to retrieve data or computations quickly without repeating the same expensive operations.

Best Practices for Caching and Memoization in Node.js:

Identify Cacheable Data:

- Analyze your application to identify data or computations that are expensive to generate but are frequently accessed or reused.

- Focus on data that does not change frequently or has a long expiration period, such as configuration settings, static content, or results of expensive database queries.

Choose an Appropriate Cache Mechanism:

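- Options range from in-process caches (for example, a Map or an LRU cache inside the Node.js process) to external in-memory stores like Redis or Memcached, which can be shared across processes and servers, as well as file-based caching.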

- Consider factors such as data size, access patterns, expiration requirements, and deployment environment when selecting a caching solution.

Implement Cache Invalidation Strategies:

- Implement cache invalidation mechanisms to ensure that the cached data remains up-to-date and consistent with the source of truth.

- Use strategies like time-based expiration, versioning, or event-based invalidation to manage the cache’s freshness and prevent serving stale data.
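To illustrate time-based expiration and event-based invalidation together, here is a minimal in-process cache sketch. TtlCache and getProduct are hypothetical names, the loader passed to getProduct stands in for a database query, and a production system would more likely use Redis or an established caching library.

```javascript
// Minimal in-process cache with TTL expiration and explicit invalidation.
class TtlCache {
  constructor(ttlMs, now = Date.now) {
    this.ttlMs = ttlMs;
    this.now = now; // injectable clock, handy for tests
    this.entries = new Map(); // key -> { value, expiresAt }
  }

  get(key) {
    const entry = this.entries.get(key);
    if (!entry) return undefined;
    if (this.now() >= entry.expiresAt) {
      this.entries.delete(key); // lazily drop stale entries on read
      return undefined;
    }
    return entry.value;
  }

  set(key, value) {
    this.entries.set(key, { value, expiresAt: this.now() + this.ttlMs });
  }

  // Event-based invalidation: call this when the source of truth changes.
  invalidate(key) {
    this.entries.delete(key);
  }
}

// Cache-aside read path with a hypothetical loader (e.g. a database query).
async function getProduct(cache, id, loadFromDb) {
  const cached = cache.get(id);
  if (cached !== undefined) return cached;
  const fresh = await loadFromDb(id);
  cache.set(id, fresh);
  return fresh;
}
```

A write path would typically update the database first and then call invalidate() for the affected key, so the next read reloads fresh data.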

Use Cache-Control Headers:

- When serving cached data over HTTP, utilize cache-control headers to control how intermediate proxies or browsers cache the response.

- Set appropriate Cache-Control directives such as max-age, must-revalidate, or no-cache to instruct clients on caching behavior and prevent serving outdated data.
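As a minimal sketch using only the built-in http module, the server below sends different Cache-Control policies for two hypothetical endpoints: shared reference data that any cache may reuse for an hour, and per-user data that shared caches must not store and that browsers must revalidate before reuse.

```javascript
const http = require('node:http');

// Two hypothetical endpoints with different caching needs.
function createServer() {
  return http.createServer((req, res) => {
    res.setHeader('Content-Type', 'application/json');
    if (req.url === '/api/countries') {
      // Shared, slow-changing reference data: cacheable anywhere for 1 hour.
      res.setHeader('Cache-Control', 'public, max-age=3600');
      res.end(JSON.stringify(['DE', 'FR', 'IN']));
    } else if (req.url === '/api/me') {
      // Per-user data: shared caches must not store it, and the browser
      // must revalidate with the server before reusing its copy.
      res.setHeader('Cache-Control', 'private, no-cache');
      res.end(JSON.stringify({ user: 'demo' }));
    } else {
      res.statusCode = 404;
      res.end(JSON.stringify({ error: 'not found' }));
    }
  });
}
```

To try it, call createServer().listen(3000) and inspect the response headers with curl -i.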

Cache at the Appropriate Granularity:

- Determine the optimal granularity for caching based on the characteristics of your application.

- Consider caching at various levels, such as page-level caching, component-level caching, or database query-level caching, depending on the specific use case.

Monitor and Measure Cache Performance:

- Implement monitoring and logging to track cache hits, misses, and overall cache performance.

- Analyze cache hit ratios, response times, and eviction rates to identify potential bottlenecks and optimize cache utilization.

Consider Cache Size and Eviction Policies:

- Be mindful of cache size limitations, especially in memory-based caches, to prevent memory exhaustion or excessive cache evictions.

- Select appropriate eviction policies like LRU (Least Recently Used) or LFU (Least Frequently Used) to ensure the cache retains the most relevant and frequently accessed data.
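An LRU policy can be sketched in a few lines because a JavaScript Map iterates keys in insertion order. LruCache is a hypothetical name; in production, an established package or your cache server’s own eviction settings are usually the better choice.

```javascript
// Re-inserting a key on access moves it to the "newest" end of the Map,
// so the first key in iteration order is always the least recently used.
class LruCache {
  constructor(maxEntries) {
    this.maxEntries = maxEntries;
    this.map = new Map();
  }

  get(key) {
    if (!this.map.has(key)) return undefined;
    const value = this.map.get(key);
    this.map.delete(key); // move to the most-recently-used position
    this.map.set(key, value);
    return value;
  }

  set(key, value) {
    if (this.map.has(key)) this.map.delete(key);
    this.map.set(key, value);
    if (this.map.size > this.maxEntries) {
      const oldestKey = this.map.keys().next().value;
      this.map.delete(oldestKey); // evict the least recently used entry
    }
  }
}

const cache = new LruCache(2);
cache.set('a', 1);
cache.set('b', 2);
cache.get('a');    // 'a' is now the most recently used
cache.set('c', 3); // evicts 'b'
console.log([...cache.map.keys()]); // [ 'a', 'c' ]
```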

Memoize Expensive Function Calls:

- Identify computationally expensive functions that have deterministic outputs based on their input parameters.

- Implement memoization techniques to store the function’s results based on its input parameters, avoiding redundant function calls for the same inputs.
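A minimal memoization sketch for a pure function of one primitive argument. memoize is a hypothetical helper, the naive recursive Fibonacci function only stands in for an expensive deterministic computation, and the call counter shows that each input is computed once. Functions with several or object-valued arguments would need a key-serialization strategy.

```javascript
// Cache results by argument; valid only for deterministic functions.
function memoize(fn) {
  const cache = new Map();
  return function memoized(arg) {
    if (cache.has(arg)) return cache.get(arg);
    const result = fn(arg);
    cache.set(arg, result);
    return result;
  };
}

// Naive recursive Fibonacci: exponential without memoization.
let calls = 0;
const fib = memoize((n) => {
  calls++;
  return n < 2 ? n : fib(n - 1) + fib(n - 2);
});

console.log(fib(40), calls); // 102334155 41
```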

Test and Validate Cached Results:

- Validate the correctness of the cached results by periodically testing against the source of truth.

- Implement automated tests and validation mechanisms to ensure that the cache is serving accurate and up-to-date data.

Error Handling and Logging:

Error handling and logging are crucial aspects of building robust and maintainable data-intensive applications. Properly handling errors ensures that your application can gracefully recover from unexpected situations and provide meaningful feedback to users or administrators. Logging helps capture important events, error details, and performance metrics, aiding in debugging, monitoring, and improving application performance.

Error Handling Best Practices:

Centralized Error Handling:

- Implement a centralized error-handling mechanism to capture and handle errors consistently throughout your application.

- Use error-handling middleware in Node.js frameworks like Express (a middleware function declared with four arguments, (err, req, res, next)) to centralize error-handling logic.

Use Meaningful Error Messages:

- Provide clear and meaningful error messages to users or clients when exceptions or errors occur.

- Include relevant information about the error, its context, and potential solutions to assist users in understanding and resolving the issue.

Graceful Error Recovery:

- Implement appropriate error recovery mechanisms to handle recoverable errors and avoid application crashes.

- Use try-catch blocks to catch synchronous errors, and check the error argument of error-first callbacks to handle errors from callback-based APIs.

- Utilize promises or async/await constructs to handle asynchronous errors and ensure proper error propagation.

Custom Error Classes:

- Define custom error classes that inherit from the built-in Error object to create a hierarchy of specific error types.

- Custom error classes help differentiate between different types of errors, making it easier to handle specific error scenarios.
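Here is a sketch of a small error hierarchy plus a single function that maps any error to a response. The class names and toErrorResponse are hypothetical; the idea is that expected errors carry their own status code, while unknown errors are logged in full and reported to clients only generically.

```javascript
// Hypothetical error hierarchy for a data API.
class AppError extends Error {
  constructor(message, statusCode = 500) {
    super(message);
    this.name = this.constructor.name; // e.g. 'NotFoundError'
    this.statusCode = statusCode;
  }
}

class ValidationError extends AppError {
  constructor(message, details = []) {
    super(message, 400);
    this.details = details;
  }
}

class NotFoundError extends AppError {
  constructor(resource) {
    super(`${resource} not found`, 404);
  }
}

// Central mapping from any thrown value to a client-safe response.
function toErrorResponse(err, log = console.error) {
  if (err instanceof AppError) {
    return {
      status: err.statusCode,
      body: { error: err.name, message: err.message, details: err.details },
    };
  }
  log(err); // unexpected: keep the full stack trace in the logs only
  return { status: 500, body: { error: 'InternalError', message: 'Something went wrong' } };
}

console.log(toErrorResponse(new NotFoundError('Dataset')));
```

In Express, this function would typically be called from an error-handling middleware registered after all routes, for example app.use((err, req, res, next) => { const { status, body } = toErrorResponse(err); res.status(status).json(body); }).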

Error Logging:

- Implement a robust logging system to capture important error events, warnings, and other application-specific information.

- Log error stack traces, error messages, timestamps, and contextual data to facilitate troubleshooting and debugging.

Logging Best Practices:

Choose an Appropriate Logging Framework:

- Select a logging framework or library that best suits your application’s requirements and supports the desired logging levels, formats, and destinations.

- Popular Node.js logging frameworks include Winston, Bunyan, and Pino.

Define Logging Levels:

- Define different logging levels, such as error, warning, info, debug, and trace, to categorize log messages based on their importance or severity.

- Use the appropriate logging level to ensure that log messages provide relevant information without cluttering the log output.

Log Contextual Information:

- Include contextual information in log messages to provide additional context and aid in troubleshooting.

- Log relevant request/response data, user information, session IDs, or any other data that helps understand the context of the log event.
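To show how levels and context fit together, here is a deliberately tiny structured logger that writes one JSON object per line. createLogger and its options are hypothetical and only illustrate the idea; libraries such as Pino or Winston provide the same concepts (levels, child loggers with bound context) with far more features and better performance.

```javascript
// Lower number = more severe. Messages above the threshold are dropped.
const LEVELS = { error: 0, warn: 1, info: 2, debug: 3, trace: 4 };

function createLogger({ level = 'info', context = {}, write = (line) => process.stdout.write(line + '\n') } = {}) {
  const threshold = LEVELS[level];
  const logger = {};
  for (const [name, severity] of Object.entries(LEVELS)) {
    logger[name] = (message, fields = {}) => {
      if (severity > threshold) return; // filtered out by the configured level
      write(JSON.stringify({ time: new Date().toISOString(), level: name, message, ...context, ...fields }));
    };
  }
  // child() returns a logger that stamps extra context on every line,
  // e.g. a request ID shared by all log lines for one request.
  logger.child = (extra) => createLogger({ level, context: { ...context, ...extra }, write });
  return logger;
}

const log = createLogger({ level: 'info', context: { service: 'ingest' } });
const reqLog = log.child({ requestId: 'req-42' });
reqLog.info('batch stored', { rows: 5000, durationMs: 182 });
reqLog.debug('not printed at level=info');
```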

Log Performance Metrics:

- Log important performance metrics, such as response times, database query durations, or resource utilization, to monitor and optimize application performance.

- Use tools like Node.js’s built-in perf_hooks or third-party libraries to measure and log performance metrics.
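A minimal sketch using performance.now() from perf_hooks to time an operation and log the duration as a structured metric. timed is a hypothetical helper, and the timer-based promise stands in for a real database query.

```javascript
const { performance } = require('node:perf_hooks');

// Measure an async operation with the high-resolution clock and log the
// duration, whether the operation succeeds or throws.
async function timed(name, fn, log = console.log) {
  const start = performance.now();
  try {
    return await fn();
  } finally {
    const durationMs = performance.now() - start;
    log(JSON.stringify({ metric: 'duration', name, durationMs: Number(durationMs.toFixed(2)) }));
  }
}

// Hypothetical query; the timeout simulates database latency.
timed('db.query.users', () => new Promise((resolve) => setTimeout(() => resolve(['ada', 'linus']), 50)))
  .then((rows) => console.log(`got ${rows.length} rows`));
```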

Log Rotation and Retention:

- Implement log rotation and retention policies to manage log files efficiently and prevent disk space issues.

- Consider compressing or archiving older log files while ensuring that log retention meets any regulatory or compliance requirements.

Integration with Monitoring Systems:

- Integrate your logging system with monitoring tools or frameworks to aggregate and analyze log data.

- Centralized log management systems like ELK Stack (Elasticsearch, Logstash, and Kibana) or Splunk can help with log analysis and monitoring.

Use Streams for Efficient Data Processing:

In Node.js, streams are a powerful feature that allows for efficient and scalable data processing. Streams handle data in chunks rather than loading the entire dataset into memory at once. This chunked processing enables applications to process data incrementally, reducing memory consumption and improving overall performance. Streams can be used for various tasks such as reading and writing files, network communication, and data transformation.

Streams work by breaking data into manageable chunks, which are processed or passed through a pipeline of operations. Each chunk of data is processed as it becomes available, instead of waiting for the entire dataset to be loaded into memory. This approach is particularly useful when dealing with large files, network communication with low latency, or real-time data processing scenarios.

Here are some best practices for using streams in Node.js:

Use Appropriate Stream Types:

- Node.js provides different types of streams, such as Readable, Writable, Transform, and Duplex streams.

- Select the appropriate stream type based on the nature of your data and the processing requirements.

Piping Streams:

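- Utilize the pipe() method to connect streams together, allowing data to flow seamlessly from one stream to another. For production code, prefer stream.pipeline() (or its promise version in stream/promises), which forwards errors and destroys every stream in the chain if one of them fails; pipe() does not do this for you.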

- Piping simplifies the handling of data transformation or transferring data between different sources or destinations.

Handle Errors and Event Handlers:

- Register error handlers and event handlers to handle errors, data events, and completion events.

- Proper error handling ensures that errors during data processing are captured and handled appropriately.
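The sketch below uses pipeline() from stream/promises to connect a readable source to a writable sink and shows how a failure in any stage surfaces as a single rejected promise. recordSource, collectInto, and run are hypothetical helpers, and the generator’s thrown error stands in for a corrupt input record.

```javascript
const { Readable, Writable } = require('node:stream');
const { pipeline } = require('node:stream/promises');

// Hypothetical record source; Readable.from turns an iterable into a stream.
function recordSource(count, failAt = -1) {
  return Readable.from((function* () {
    for (let i = 0; i < count; i++) {
      if (i === failAt) throw new Error(`corrupt record at ${i}`);
      yield { id: i };
    }
  })());
}

// Sink that collects IDs; in practice this might write to a file or a database.
function collectInto(target) {
  return new Writable({
    objectMode: true,
    write(record, _encoding, callback) {
      target.push(record.id);
      callback();
    },
  });
}

async function run(count, failAt) {
  const ids = [];
  // pipeline() wires the streams together, honors back-pressure, and if any
  // stream errors it destroys all of them and rejects with that error.
  await pipeline(recordSource(count, failAt), collectInto(ids));
  return ids;
}

run(5).then((ids) => console.log('ok:', ids));
run(5, 3).catch((err) => console.error('pipeline failed:', err.message));
```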

Use Chunk Size Optimization:

- Experiment with different chunk sizes to optimize data processing performance.

- Smaller chunk sizes (controlled by the highWaterMark option when creating a stream) may result in more frequent I/O operations but can improve response times and reduce memory usage.

Implement Stream Back-Pressure:

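- Respect back-pressure so that a fast producer does not overwhelm a slow consumer and exhaust memory. pipe() and stream.pipeline() handle this automatically; when you call write() yourself, stop writing when it returns false and resume on the 'drain' event.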

- Techniques such as flow control, rate limiting, or buffering can help maintain a balance between data production and consumption.
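When you write to a stream by hand, back-pressure is your responsibility. In this sketch, a deliberately slow writable (the hypothetical slowSink, with a small highWaterMark of 4 objects) forces the producer to pause: produce stops whenever write() returns false and resumes on the 'drain' event, using events.once().

```javascript
const { Writable } = require('node:stream');
const { once } = require('node:events');

// Slow sink with a small buffer: at most 4 queued objects before write() returns false.
function slowSink(received) {
  return new Writable({
    objectMode: true,
    highWaterMark: 4,
    write(chunk, _enc, callback) {
      received.push(chunk);
      setTimeout(callback, 1); // simulate slow I/O
    },
  });
}

// Respect write()'s return value: false means "buffer full, wait for 'drain'".
async function produce(sink, total) {
  let pauses = 0;
  for (let i = 0; i < total; i++) {
    if (!sink.write({ seq: i })) {
      pauses++;
      await once(sink, 'drain');
    }
  }
  sink.end();
  await once(sink, 'finish'); // all buffered writes have been processed
  return pauses;
}

const received = [];
produce(slowSink(received), 20).then((pauses) => {
  console.log(`wrote ${received.length} records, paused ${pauses} times for drain`);
});
```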

Utilize Transform Streams:

- Transform streams allow for data transformation during stream processing.

- Leverage Transform streams to modify, filter, or aggregate data as it passes through the stream pipeline.
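A sketch of two Transform streams in one pipeline: the first parses and filters lines, and the second aggregates them and emits a summary from its flush() callback once the input ends. The "sensorId,celsius" input format and the helper names are hypothetical.

```javascript
const { Readable, Transform, Writable } = require('node:stream');
const { pipeline } = require('node:stream/promises');

// Parse "sensorId,celsius" lines, drop invalid rows, convert units.
function parseAndFilter() {
  return new Transform({
    readableObjectMode: true,
    writableObjectMode: true,
    transform(line, _enc, callback) {
      const [sensorId, raw] = String(line).split(',');
      const celsius = Number(raw);
      if (!sensorId || Number.isNaN(celsius)) return callback(); // filter out
      callback(null, { sensorId, fahrenheit: celsius * 9 / 5 + 32 });
    },
  });
}

// Aggregate: consume every reading, emit one summary when input ends.
function summarize() {
  let count = 0;
  let max = -Infinity;
  return new Transform({
    objectMode: true,
    transform(reading, _enc, callback) {
      count++;
      max = Math.max(max, reading.fahrenheit);
      callback(); // nothing emitted per reading
    },
    flush(callback) {
      callback(null, { count, maxFahrenheit: max });
    },
  });
}

async function main(lines) {
  const out = [];
  await pipeline(
    Readable.from(lines),
    parseAndFilter(),
    summarize(),
    new Writable({ objectMode: true, write(obj, _e, cb) { out.push(obj); cb(); } }),
  );
  return out[0];
}

main(['s1,20', 's2,oops', 's3,100', ',5']).then((summary) => console.log(summary));
// { count: 2, maxFahrenheit: 212 }
```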

Use Stream Libraries or Modules:

- Explore third-party stream libraries or modules that provide additional functionalities or utilities for stream-based processing.

- Libraries like highland, through2, or pumpify offer enhanced stream processing capabilities.

Test and Optimize Stream Performance:

- Perform thorough testing to ensure that stream processing meets performance expectations.

- Analyze and optimize stream performance by profiling and benchmarking different stream configurations and processing scenarios.

Security:

Ensuring the security of data-intensive applications is of utmost importance to protect sensitive information, prevent unauthorized access, and maintain the integrity of the system. Data breaches and security vulnerabilities can have severe consequences, including financial loss, damage to reputation, and legal implications. Therefore, it is essential to follow best practices to enhance the security of your Node.js applications.

Here are some best practices for securing data-intensive applications:

Input Validation:

- Validate and sanitize all user input, including form data, query parameters, and request bodies.

- Implement server-side input validation to prevent common security vulnerabilities like SQL injection, cross-site scripting (XSS), and command injection attacks.
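A hand-written, allow-list style validator for a hypothetical export request, to illustrate the principle. Schema libraries such as Joi, Zod, or Ajv express the same rules declaratively and are usually preferable in a real codebase; the field names and limits here are assumptions.

```javascript
const ALLOWED_FORMATS = new Set(['csv', 'json']);

// Validate a hypothetical export request body on the server.
function validateExportRequest(body) {
  if (typeof body !== 'object' || body === null) {
    return { ok: false, errors: ['body must be a JSON object'] };
  }
  const errors = [];
  // Allow-list checks: accept only known-good values instead of stripping bad ones.
  if (!ALLOWED_FORMATS.has(body.format)) {
    errors.push('format must be "csv" or "json"');
  }
  if (!Number.isInteger(body.limit) || body.limit < 1 || body.limit > 10000) {
    errors.push('limit must be an integer between 1 and 10000');
  }
  if (typeof body.datasetId !== 'string' || !/^[a-z0-9-]{1,64}$/.test(body.datasetId)) {
    errors.push('datasetId must be 1 to 64 lowercase letters, digits, or hyphens');
  }
  if (errors.length > 0) return { ok: false, errors };
  // Pass on only the validated fields, so unexpected properties never reach later code.
  return { ok: true, value: { format: body.format, limit: body.limit, datasetId: body.datasetId } };
}

console.log(validateExportRequest({ format: 'csv', limit: 500, datasetId: 'sales-2024' }));
console.log(validateExportRequest({ format: 'xml', limit: '500; DROP TABLE', datasetId: '../etc' }));
```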

Secure Authentication and Authorization:

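- Implement secure authentication mechanisms: store passwords only as salted hashes produced by a slow, purpose-built algorithm such as bcrypt, scrypt, or Argon2 (never as plain text or reversibly encrypted values), and encrypt credentials in transit.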

- Use strong password policies and enforce multi-factor authentication (MFA) where applicable.

- Implement role-based access control (RBAC) to enforce proper authorization and limit access to sensitive data or functionality.
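A minimal sketch of salted password hashing with the scrypt function in Node’s built-in crypto module, verified with a constant-time comparison. hashPassword, verifyPassword, and the "salt:hash" storage format are hypothetical choices, and the default scrypt cost parameters used here should be reviewed against current guidance for your environment.

```javascript
const crypto = require('node:crypto');
const { promisify } = require('node:util');

const scrypt = promisify(crypto.scrypt);
const KEY_LENGTH = 64;

// Hash with a fresh random salt per password; store "salt:hash" (both hex).
async function hashPassword(password) {
  const salt = crypto.randomBytes(16);
  const derived = await scrypt(password, salt, KEY_LENGTH);
  return `${salt.toString('hex')}:${derived.toString('hex')}`;
}

// Re-derive with the stored salt and compare in constant time,
// so response timing does not leak how many bytes matched.
async function verifyPassword(password, stored) {
  const [saltHex, hashHex] = stored.split(':');
  const expected = Buffer.from(hashHex, 'hex');
  const derived = await scrypt(password, Buffer.from(saltHex, 'hex'), expected.length);
  return crypto.timingSafeEqual(derived, expected);
}

(async () => {
  const stored = await hashPassword('correct horse battery staple');
  console.log(await verifyPassword('correct horse battery staple', stored)); // true
  console.log(await verifyPassword('wrong guess', stored)); // false
})();
```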

Protect against Cross-Site Scripting (XSS) Attacks:

- Use output encoding or escaping techniques to prevent XSS attacks.

- Avoid using user-generated content directly in HTML templates or dynamic script execution contexts.
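Output encoding can be sketched as a small escaping function applied whenever untrusted text is inserted into HTML. escapeHtml is a hypothetical helper that covers HTML element content and quoted attribute values only; mainstream template engines generally provide equivalent auto-escaping, which is the safer default.

```javascript
// Escape the characters that are significant in HTML text and quoted attributes.
const HTML_ESCAPES = { '&': '&amp;', '<': '&lt;', '>': '&gt;', '"': '&quot;', "'": '&#39;' };

function escapeHtml(value) {
  return String(value).replace(/[&<>"']/g, (ch) => HTML_ESCAPES[ch]);
}

const comment = '<img src=x onerror="alert(1)">';
console.log(`<p>${escapeHtml(comment)}</p>`);
// <p>&lt;img src=x onerror=&quot;alert(1)&quot;&gt;</p>
```

HTML escaping is not sufficient in other contexts, such as inline JavaScript, CSS, or URLs (for example, a javascript: link), which each need their own handling.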

Prevent SQL Injection:

- Use parameterized queries or prepared statements to avoid SQL injection attacks.

- Avoid constructing SQL queries by concatenating user input directly into query strings.

Implement Secure Session Management:

- Use secure session management techniques such as session IDs with strong entropy, session expiration, and secure cookie settings.

- Implement measures to protect against session hijacking, session fixation, and session replay attacks.
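A sketch of session ID generation and hardened cookie attributes using only the built-in crypto module. The cookie name sid, the 30-minute lifetime, and the in-memory sessions map are assumptions; real deployments usually rely on a session library and a shared store.

```javascript
const crypto = require('node:crypto');

// 32 bytes from the OS CSPRNG (256 bits of entropy), URL-safe encoded.
function newSessionId() {
  return crypto.randomBytes(32).toString('base64url');
}

// Set-Cookie value with hardened attributes: HttpOnly (no script access),
// Secure (HTTPS only), SameSite=Lax (limits cross-site sending), bounded lifetime.
function sessionCookie(sessionId, maxAgeSeconds = 60 * 30) {
  return [
    `sid=${sessionId}`,
    'HttpOnly',
    'Secure',
    'SameSite=Lax',
    'Path=/',
    `Max-Age=${maxAgeSeconds}`,
  ].join('; ');
}

// On login, always issue a new ID (never keep one from before authentication)
// to defeat session fixation. The in-memory store is hypothetical.
const sessions = new Map();
function login(userId) {
  const id = newSessionId();
  sessions.set(id, { userId, expiresAt: Date.now() + 30 * 60 * 1000 });
  return sessionCookie(id);
}

console.log(login('user-7'));
// sid=<43 random URL-safe chars>; HttpOnly; Secure; SameSite=Lax; Path=/; Max-Age=1800
```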

Protect against Cross-Site Request Forgery (CSRF) Attacks:

- Implement CSRF protection mechanisms such as anti-CSRF tokens or the SameSite cookie attribute.

- Validate the Origin and Referer headers to ensure requests originate from trusted sources.

Secure Data Storage:

- Use encryption techniques to secure sensitive data at rest, such as stored credentials (other than passwords, which should be hashed), personally identifiable information (PII), or financial data.

- Employ appropriate encryption algorithms and key management practices.
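A minimal sketch of encrypting a field at rest with AES-256-GCM from Node’s built-in crypto module. The helper names and the iv + tag + ciphertext storage layout are assumptions, and the randomly generated key is for demonstration only: real keys belong in a key management service or secret manager.

```javascript
const crypto = require('node:crypto');

// Authenticated encryption: GCM detects tampering as well as hiding the data.
function encrypt(plaintext, key) {
  const iv = crypto.randomBytes(12); // 96-bit nonce, must never repeat for a key
  const cipher = crypto.createCipheriv('aes-256-gcm', key, iv);
  const ciphertext = Buffer.concat([cipher.update(plaintext, 'utf8'), cipher.final()]);
  const tag = cipher.getAuthTag(); // 16-byte integrity tag
  // Store iv, tag, and ciphertext together; only the key is secret.
  return Buffer.concat([iv, tag, ciphertext]).toString('base64');
}

function decrypt(payload, key) {
  const data = Buffer.from(payload, 'base64');
  const iv = data.subarray(0, 12);
  const tag = data.subarray(12, 28);
  const ciphertext = data.subarray(28);
  const decipher = crypto.createDecipheriv('aes-256-gcm', key, iv);
  decipher.setAuthTag(tag);
  // final() throws if the ciphertext or tag was modified.
  return Buffer.concat([decipher.update(ciphertext), decipher.final()]).toString('utf8');
}

const key = crypto.randomBytes(32); // demo only: a throwaway 256-bit key
const stored = encrypt('account: 12345678', key);
console.log(decrypt(stored, key)); // account: 12345678
```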

Implement Transport Layer Security (TLS):

- Use TLS/SSL certificates to encrypt data transmitted over the network.

- Implement HTTPS for secure communication between clients and the server.

Keep Dependencies Updated:

- Regularly update and patch dependencies to mitigate security vulnerabilities.

- Use package management tools (for example, npm audit and npm outdated) to track and manage dependencies effectively.

Implement Security Monitoring and Logging:

- Implement logging and monitoring mechanisms to detect and respond to security incidents.

- Monitor access logs, error logs, and application-level security events.

- Implement security information and event management (SIEM) systems to aggregate and analyze security-related logs.

Regular Security Audits and Penetration Testing:

- Conduct regular security audits and penetration testing to identify vulnerabilities and weaknesses in the application.

- Use automated scanning tools, manual code reviews, and ethical hacking techniques to assess the security posture of your application.

Follow Security Best Practices:

- Stay updated with security best practices and guidelines provided by reputable sources such as OWASP (Open Web Application Security Project).

- Implement secure coding practices, such as input validation, output encoding, and the least privilege principle.

Monitoring and Performance Optimization:

Monitoring and optimizing the performance of data-intensive applications are crucial tasks to ensure their efficient operation, scalability, and responsiveness. By implementing effective monitoring strategies and optimizing performance, you can identify and resolve bottlenecks, improve resource utilization, and provide a smooth user experience. Here are some best practices for monitoring and performance optimization in Node.js applications:

Define Performance Metrics:

- Identify key performance metrics specific to your application, such as response time, throughput, error rates, and resource utilization.

- Establish benchmarks and goals to measure and track the performance of your application over time.

Implement Logging and Error Handling:

- Use a centralized logging system to capture relevant information about application behavior, errors, and exceptions.

- Implement proper error handling and error reporting mechanisms to identify and address issues proactively.

Monitor System Resources:

- Monitor and track system resources like CPU usage, memory consumption, disk I/O, and network traffic.

- Use monitoring tools and utilities to gain insights into resource usage patterns and identify any potential bottlenecks.
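A sketch of process-level resource sampling with built-in APIs: process.memoryUsage(), process.cpuUsage(), and perf_hooks.monitorEventLoopDelay(), whose event-loop delay histogram is a useful signal that something is blocking the loop. sampleResources, the 1-second interval, and logging to stdout are assumptions; an APM agent or metrics library would normally collect and ship these values.

```javascript
const { monitorEventLoopDelay } = require('node:perf_hooks');

// Snapshot of process-level resource usage in friendlier units.
function sampleResources() {
  const mem = process.memoryUsage(); // bytes
  const cpu = process.cpuUsage();    // microseconds since process start
  return {
    rssMB: mem.rss / 1024 / 1024,
    heapUsedMB: mem.heapUsed / 1024 / 1024,
    cpuUserMs: cpu.user / 1000,
    cpuSystemMs: cpu.system / 1000,
  };
}

// Event-loop delay histogram, sampled every 20 ms; values are in nanoseconds.
const loopDelay = monitorEventLoopDelay({ resolution: 20 });
loopDelay.enable();

const timer = setInterval(() => {
  console.log(JSON.stringify({
    ...sampleResources(),
    loopDelayP99Ms: loopDelay.percentile(99) / 1e6,
    loopDelayMaxMs: loopDelay.max / 1e6,
  }));
  loopDelay.reset(); // report each interval independently
}, 1000);

// For this demo only: stop after a few samples so the process can exit.
setTimeout(() => { clearInterval(timer); loopDelay.disable(); }, 2500);
```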

Application Performance Monitoring (APM):

- Utilize APM tools to monitor the performance and health of your application in real time.

- APM tools provide detailed insights into request processing, database queries, external API calls, and other critical components.

Load Testing:

- Conduct load testing to simulate high-traffic scenarios and evaluate the performance and scalability of your application.

- Use load testing tools to measure response times, throughput, and resource utilization under different load conditions.

Caching and Memoization:

- Implement caching strategies to reduce the load on the database or external APIs.

- Identify frequently accessed or computationally expensive data and cache the results for faster access.

Continuous Performance Monitoring:

- Implement continuous monitoring of performance metrics and analyze trends over time.

- Set up alerts and notifications to proactively detect and respond to performance degradation or anomalies.

Wrapping it Up:

Node.js has proven to be a powerful platform for building data-intensive applications, offering scalability, real-time capabilities, and efficient data processing. By leveraging its event-driven and non-blocking I/O nature, developers can create robust, high-performance applications that can handle large volumes of data and concurrent connections. Whether you’re building real-time analytics dashboards, web scraping applications, or data processing pipelines, Node.js provides a solid foundation for your data-intensive projects. By following the best practices outlined in this blog, you can ensure the efficiency, scalability, and reliability of your Node.js applications in the data-intensive landscape.
